Anthropic has launched Claude Gov, a specialised artificial intelligence service designed exclusively for US defence and intelligence agencies, marking the company's latest push into the government sector.

The AI firm announced on Thursday that its new models have been custom-built to handle classified information and support national security operations, with "looser guardrails" compared to consumer-facing versions of Claude.

"What makes Claude Gov models special is that they were custom-built for our national security customers," said Thiyagu Ramasamy, head of public sector at Anthropic. "By understanding their operational needs and incorporating real-world feedback, we've created a set of safe, reliable, and capable models that can excel within the unique constraints and requirements of classified environments."

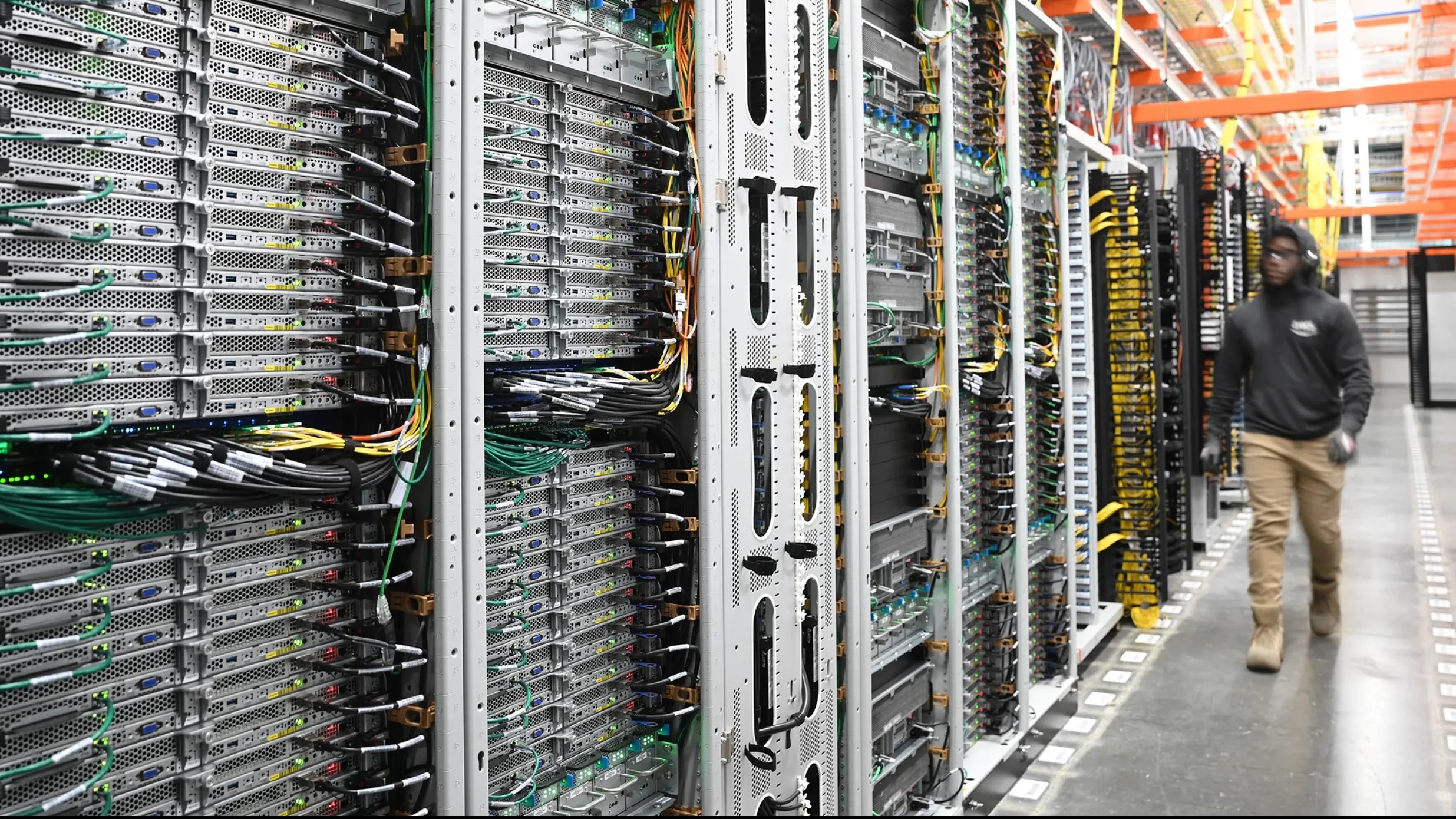

The models are already deployed by agencies "at the highest level of US national security," according to Anthropic, though the company declined to specify how long they have been in use or provide usage statistics.

Claude Gov models are designed to handle government-specific tasks including threat assessment, intelligence analysis, and strategic planning. Key features include improved handling of classified materials, with the models programmed to "refuse less when engaging with classified information" that consumer versions would typically flag and avoid.

The models also offer enhanced understanding of defence and intelligence documents, better proficiency in languages and dialects relevant to national security, and improved interpretation of complex cybersecurity data.

Access to Claude Gov will be limited to government agencies handling classified information, with the models capable of operating in top secret environments.

The launch follows the creation of contractual exceptions to Anthropic's usage policy at least eleven months ago, which are "carefully calibrated to enable beneficial uses by carefully selected government agencies." Whilst certain uses remain prohibited, including disinformation campaigns, weapons design, and malicious cyber operations, Anthropic can "tailor use restrictions to the mission and legal authorities of a government entity."

Claude Gov represents Anthropic's response to OpenAI's ChatGPT Gov, launched in January for US government agencies. OpenAI reported that over 90,000 federal, state, and local government employees had used its technology within the past year for tasks including document translation, policy memo drafting, and application building.

The move reflects a broader trend of AI companies seeking government contracts in an uncertain regulatory landscape. Scale AI recently signed deals with the Department of Defense and Qatar's government, whilst Anthropic participates in Palantir's FedStart programme for federal government-facing software deployment.

The use of AI by government agencies has faced scrutiny due to documented cases of bias in facial recognition systems, predictive policing, and welfare assessment algorithms, alongside concerns about impacts on minorities and vulnerable communities.


© 2019 Perspective Publishing
